While the peer review process is the bedrock of modern science, it is notoriously slow, subjective, and inefficient. This blog post explores how Large Language Models (LLMs) can be used to re-imagine the review architecture, augmenting human expertise to build a system that is faster, more consistent, and ultimately more insightful.

A New Architecture: The LLM Triumvirate

Imagine a process where, upon submission, a paper is not sent to a handful of human reviewers but is instead analyzed by a triumvirate of specialized LLM personas. Each is given a specific, adversarial role, designed to stress-test the paper from a different critical angle. Their outputs are not meant to be the final word, but rather a structured, multi-faceted dossier for a human Associate Editor.

The three personas would be:

- The Guardian: This persona is the fierce defender of the status quo. Programmed with a prompt to act as a deeply skeptical expert whose own work is adjacent to the submission, its goal is to kill the paper. It relentlessly seeks out methodological flaws, unsupported claims, and logical inconsistencies. It asks: “Is this work fundamentally wrong? Does the experimental evidence truly support the conclusions?” The Guardian’s review is an adversarial stress test, ensuring that any work that passes has been rigorously vetted for its structural integrity.

- The Synthesizer: This is the curious, conscientious reviewer. Its prompt encourages it to take a panoramic view of the entire research space. Its primary function is to understand the work’s relevance and place it in the broader context of the field, even if the authors have done a poor job themselves. It performs a comprehensive literature search to evaluate claims of significance and contribution. It asks: “Where does this fit? Is this an incremental improvement, a dead end, or a potential bridge to a new area? Is this a solution in search of a problem, or does it address a genuine need?” The Synthesizer provides the contextual map against which the paper’s journey can be judged.

- The Innovator: This persona is focused on a single question: novelty. It concentrates on the core idea and is tasked with determining whether it has been done before. It scours academic papers, pre-prints, patents, and even obscure technical reports to find prior art. It deconstructs the paper’s contribution to its most essential elements and asks: “Is this core insight truly new? Are the claimed figures of merit impressive enough to justify the added complexity?” The Innovator is the ultimate guard against reinvention and trivial advancements.

Detailed prompts for the three personas above are in the separate Google doc. The personas are inspired by Karu’s satirical take on what reviewers do, put here to constructive use.
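To make the triumvirate concrete, here is a minimal sketch of how the three personas might be wired up. The prompt wording below is a short paraphrase of the descriptions above, not the detailed prompts from the Google doc, and `llm` is a hypothetical stand-in for whatever model API is used: any function that takes a prompt string and returns a string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str
    stance: str        # one-line role, paraphrased from the post
    questions: tuple   # the questions this persona asks of the paper


PERSONAS = (
    Persona(
        "Guardian",
        "a deeply skeptical expert in an adjacent area who looks for fatal flaws",
        ("Is this work fundamentally wrong?",
         "Does the experimental evidence truly support the conclusions?"),
    ),
    Persona(
        "Synthesizer",
        "a curious, conscientious reviewer who places the work in its field",
        ("Where does this fit?",
         "Does it address a genuine need?"),
    ),
    Persona(
        "Innovator",
        "a reviewer focused only on novelty and prior art",
        ("Is this core insight truly new?",
         "Are the claimed figures of merit impressive enough "
         "to justify the added complexity?"),
    ),
)


def build_prompt(persona, paper_text):
    """Assemble an illustrative prompt for one persona (placeholder wording)."""
    questions = "\n".join(f"- {q}" for q in persona.questions)
    return (
        f"You are the {persona.name}: {persona.stance}.\n"
        f"Answer these questions about the paper:\n{questions}\n\n"
        f"PAPER:\n{paper_text}"
    )


def review_with_all(paper_text, llm):
    """Run every persona over the paper; `llm` is any callable str -> str."""
    return {p.name: llm(build_prompt(p, paper_text)) for p in PERSONAS}
```

Keeping the model behind a plain callable means the same harness can be exercised with a stub during testing and with a real model in deployment.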

From Inconsistent Prose to Structured Data

Crucially, these LLMs would not produce rambling, unstructured prose. Instead, they would populate a standardized review form, directly addressing the canonical reasons for rejection: Is it wrong? Is it novel? Is the methodology sound? Are the conclusions supported? This transforms the review from a subjective essay into a structured data set.
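One way to picture the standardized form is as a small schema that each persona's output must satisfy before it reaches the editor. The sketch below is an assumption about what such a form could look like: the field names, the three allowed verdicts, and the JSON encoding are all illustrative choices, not a format defined by any venue.

```python
import json
from dataclasses import dataclass

# Hypothetical verdict vocabulary and field names, chosen for illustration.
VERDICTS = {"yes", "no", "unclear"}
FIELDS = ("is_wrong", "is_novel", "methodology_sound", "conclusions_supported")


@dataclass
class ReviewForm:
    persona: str
    is_wrong: str
    is_novel: str
    methodology_sound: str
    conclusions_supported: str
    justification: str


def parse_review(persona, raw_json):
    """Parse one persona's JSON output, rejecting anything off-schema."""
    data = json.loads(raw_json)
    for field in FIELDS:
        if data.get(field) not in VERDICTS:
            raise ValueError(f"{field} must be one of {sorted(VERDICTS)}")
    return ReviewForm(
        persona=persona,
        justification=str(data.get("justification", "")),
        **{field: data[field] for field in FIELDS},
    )
```

Rejecting malformed output at this point keeps free-form prose out of the dossier: a review either answers the canonical questions or is sent back for regeneration.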

The Associate Editor or Area Chair, a human expert, is now elevated from a wrangler of tardy reviewers and a decoder of conflicting opinions to a true arbiter. They receive a single, coherent dossier containing three distinct analytical viewpoints: the Guardian’s rigorous critique, the Synthesizer’s contextual placement, and the Innovator’s novelty assessment. Their role shifts from administrative oversight to high-level intellectual synthesis. They can weigh the Guardian’s concerns against the Synthesizer’s view of the potential impact and make a holistic, evidence-based decision in a fraction of the time.

This model directly addresses the core failures of the current system. It eliminates the months-long delays. It removes the biases of personal and institutional rivalries. It ensures every paper receives a deep, multi-perspective analysis, not a superficial glance. While LLMs can “hallucinate,” the adversarial nature of the three personas provides a built-in error-checking mechanism, and the final verification of critical claims remains the editor’s responsibility.
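The error-checking idea can be sketched as a simple cross-check: wherever the personas give different answers to the same question, that question is flagged for the editor. This is only one possible reading of "built-in error checking". It surfaces disagreement and does not establish which persona is right, and the question keys and verdict strings are hypothetical.

```python
def find_disagreements(reviews):
    """Return the questions on which personas gave differing verdicts.

    `reviews` maps persona name -> {question: verdict}. The result maps each
    contested question to the verdict given by every persona that answered it.
    """
    questions = set()
    for answers in reviews.values():
        questions.update(answers)

    flagged = {}
    for question in sorted(questions):
        verdicts = {
            persona: answers[question]
            for persona, answers in reviews.items()
            if question in answers
        }
        # More than one distinct verdict means the personas disagree.
        if len(set(verdicts.values())) > 1:
            flagged[question] = verdicts
    return flagged
```

A flagged item is a prompt for human verification, which matches the division of labor described above: the machines narrow down where to look, and the editor decides.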

This is not a call to replace human intellect but to augment it. We are not outsourcing scientific judgment to a machine; we are using machines to perform the exhaustive, time-consuming analytical work that currently burns out our best researchers. By doing so, we free human experts to focus on the irreducible tasks of final judgment, strategic direction, and creative insight. Science is accelerating. It is time our process for validating it does, too.

A crucial question in deploying LLMs for peer review is how to ensure they are knowledgeable about the most recent scientific literature, which is essential for evaluating a submission’s novelty and contribution. One existing advantage is that models like Gemini are trained on vast datasets that include publicly accessible scholarly articles, such as those from the ACM Digital Library. This provides a strong foundation in computer science literature, although it may not cover all relevant venues, like paywalled IEEE publications. In a fully deployed system, this gap could be addressed through a Retrieval-Augmented Generation (RAG) framework. Such a system would allow reviewers or authors to upload relevant PDFs, giving the LLM direct access to the specific context and related work necessary for a thorough evaluation.
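As a rough illustration of the retrieval half of RAG, the sketch below ranks uploaded passages against a question using bag-of-words cosine similarity and prepends the best matches to the prompt. It assumes the PDFs have already been converted to plain-text passages, and it swaps in word counts where a real system would more likely use learned embeddings and a vector index. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, passages, k=2):
    """Return up to k passages most similar to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Passages with no word overlap are dropped rather than padded in.
    return [p for score, p in scored[:k] if score > 0]


def augment_prompt(question, passages, k=2):
    """Prepend the retrieved excerpts to the question."""
    hits = retrieve(question, passages, k)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(hits))
    return f"Related work excerpts:\n{context}\n\nQuestion: {question}"
```

The retrieved excerpts give the model text it can quote and compare against, rather than relying only on what it happened to see during training.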

However, there are inherent limitations to what we can currently expect from an LLM in this domain. Without a specialized system, it is a significant challenge for a general-purpose LLM to independently search for and identify the most closely related or missing works from a manuscript’s literature review. While a highly advanced, “agentic” AI might one day perform this kind of deep, investigative research, such systems are likely too expensive and complex for practical use in the near term. Therefore, the current approach must still “assume” a degree of honest and comprehensive work from the authors. The “Guardian” prompt framework mentioned earlier serves as a practical, lightweight method to guide the LLM in verifying the claims and context provided by the authors, leveraging the knowledge it has without over-relying on a research capability it does not yet possess.

Results

Nothing in architecture is complete without results and stacked bar charts. Here are three reviews of one of my own research papers. I’d challenge you to write a better review than any of these. They were all entirely LLM-generated, with no human editing whatsoever.

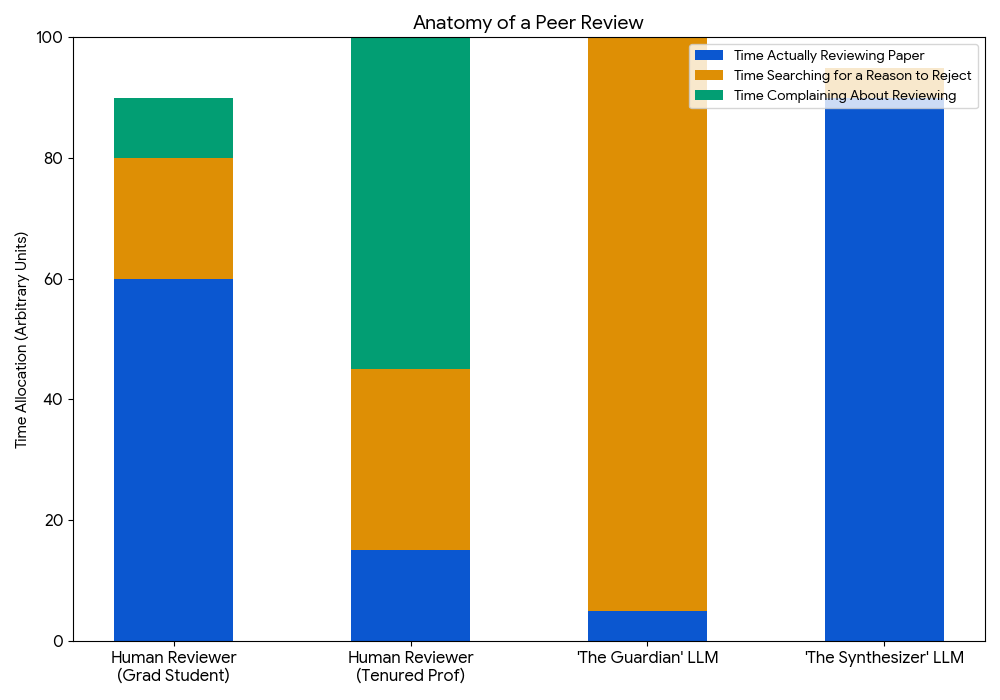

And a gratuitous stacked bar chart that makes no sense but checks the box of results.

Future Work

This raises the question: why not automate this even further with a new model built around an instant review-and-revision cycle? It could work as follows:

- Initial Paper Submission: Authors would submit their paper draft to a dedicated submission system (HotCRP, OpenReview, etc.), much as they do currently. This initial submission would be the first point of interaction with the new system.

- Instantaneous LLM-Generated Reviews: Upon submission, the system would immediately trigger an LLM-driven review process based on the three persona prompts described earlier, providing a comprehensive set of initial comments and suggestions. This immediate feedback loop would allow authors to gain preliminary insights without the typical waiting period associated with human review.

- Author Revision and Resubmission: Authors would then have a dedicated period, for instance, one week, to incorporate the LLM-generated feedback. This phase is crucial for iterative improvement, allowing authors to address identified concerns proactively. Alongside their revised manuscript, authors would submit a cover page meticulously detailing how each of the LLM’s concerns was addressed and a revision document clearly showing all changes made to the paper. This transparent approach ensures accountability and facilitates subsequent human evaluation.

- Human Decision-Making: The final decision-making authority would remain with human experts, such as human reviewers or an Area Chair. Their role would shift from initial comprehensive review to a more focused assessment: evaluating the quality of the revisions, the author’s response to the LLM feedback, and the overall scientific merit of the now-refined paper. This approach allows human reviewers to concentrate on higher-level judgment and nuanced evaluation, leveraging the LLM’s efficiency for initial screening and detailed, preliminary critique.
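The four steps above can be sketched as a small state machine. This is an illustrative model only: the stage names, the `Submission` class, and the rule that a resubmission is rejected unless the cover page responds to every LLM concern are assumptions made for the sketch, not features of HotCRP or OpenReview.

```python
from enum import Enum


class Stage(Enum):
    SUBMITTED = 1
    LLM_REVIEWED = 2
    REVISED = 3
    DECIDED = 4


class Submission:
    def __init__(self, title):
        self.title = title
        self.stage = Stage.SUBMITTED
        self.concerns = []
        self.responses = {}
        self.decision = None

    def _require(self, expected):
        # Each step is only legal from the stage that precedes it.
        if self.stage is not expected:
            raise ValueError(
                f"expected stage {expected.name}, got {self.stage.name}")

    def record_llm_reviews(self, concerns):
        """Step 2: attach the concerns raised by the LLM personas."""
        self._require(Stage.SUBMITTED)
        self.concerns = list(concerns)
        self.stage = Stage.LLM_REVIEWED

    def resubmit(self, responses):
        """Step 3: accept a revision only if every concern has a response."""
        self._require(Stage.LLM_REVIEWED)
        missing = [c for c in self.concerns
                   if not responses.get(c, "").strip()]
        if missing:
            raise ValueError(f"cover page does not address: {missing}")
        self.responses = dict(responses)
        self.stage = Stage.REVISED

    def decide(self, decision):
        """Step 4: a human records the final decision."""
        self._require(Stage.REVISED)
        self.decision = decision
        self.stage = Stage.DECIDED
```

Note that `decide` takes the outcome as an argument rather than computing it: in this sketch, as in the proposal, the decision itself always comes from a person.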

This hybrid approach promises to significantly reduce the turnaround time for initial feedback, empower authors with tools for self-improvement, and optimize the valuable time of human reviewers, ultimately leading to a more efficient, transparent, and responsive peer review ecosystem.

Strong Reject?

About the Author: Karu Sankaralingam is a Professor at UW-Madison and Principal Research Scientist at NVIDIA. He is an IEEE Fellow and holds the Mark D. Hill and David Wood Professorship at UW-Madison. He is a recipient of the Vilas Faculty Early Career Investigator Award in 2018, Wisconsin Innovation Award in 2016, IEEE TCCA Young Computer Architecture Award in 2012, the Emil H Steiger Distinguished Teaching award in 2014, the Letters and Science Philip R. Certain – Gary Sandefur Distinguished Faculty Award in 2013, and the NSF CAREER award in 2009.

Disclaimer: These posts are written by individual contributors to share their thoughts on the Computer Architecture Today blog for the benefit of the community. Any views or opinions represented in this blog are personal, belong solely to the blog author and do not represent those of ACM SIGARCH or its parent organization, ACM.